Databases provide the backbone for almost every application and system we rely on, acting like a digital filing system for storing and retrieving essential information. Whether it’s organizing customer data in a CRM or handling transactions in a banking system, an efficient database is crucial for a smooth user experience.

However, when we get into large volumes of data and more complex queries, database management can become daunting. That’s where good indexing strategies can make all the difference.

Think of it like tidying up your digital filing cabinet so you can quickly find what you need without rummaging through multiple drawers and folders to locate the correct file.

By organizing and structuring your data in a way that facilitates quick retrieval, indexing can make a big difference in how well your database performs. Here, we’ll explore some strategies to help you do just that.

Database indexing best practices

Before you settle on a strategy, it’s worth understanding the different ways you can approach indexing to improve query performance and overall database performance.

Identify key queries and columns

Before getting started with indexing, you need to identify the type of queries your application is running regularly and which columns are involved in those queries. This helps you to focus your efforts on areas that will give the best results. There’s no point in spending time and energy indexing columns that rarely get used.

For example, let’s say you’re developing an app for an online bookstore, and one of the most common queries is searching for books by author name. In this case, creating an index on the “author” column can dramatically improve the performance of search queries.
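As a rough sketch of the bookstore example, here is how that index might be created. We use Python’s built-in sqlite3 module purely so the snippet runs anywhere; the table layout, sample rows, and the index name `idx_books_author` are assumptions for illustration, and the exact syntax varies between database systems.

```python
import sqlite3

# In-memory database with a hypothetical "books" table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT, author TEXT)")
conn.executemany(
    "INSERT INTO books (title, author) VALUES (?, ?)",
    [
        ("Emma", "Jane Austen"),
        ("Persuasion", "Jane Austen"),
        ("Dracula", "Bram Stoker"),
    ],
)

# Index the column that the most common query filters on.
conn.execute("CREATE INDEX idx_books_author ON books (author)")

# The search query itself does not change; the database decides when to use the index.
titles = [
    row[0]
    for row in conn.execute(
        "SELECT title FROM books WHERE author = ? ORDER BY title", ("Jane Austen",)
    )
]

# List the indexes now defined on the table (the name is in the second column).
index_names = [row[1] for row in conn.execute("PRAGMA index_list(books)")]
```

Note that the application code doesn’t need to mention the index at all. Once it exists, the query planner can pick it up on its own.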

Data orchestration tools can examine query patterns and usage statistics to pinpoint the most commonly executed queries in your database. What is orchestration, we hear you ask.

When we talk about data, orchestration is the process of managing and coordinating various tasks like collecting, processing, and analyzing data from different sources. This helps to keep data operations well-organized and efficient.

By understanding which queries are commonly used, database administrators can prioritize indexing efforts on the columns involved in these queries.

Avoid over-indexing

While indexing can undoubtedly speed up query performance, as the saying goes, you can have too much of a good thing.

Over-indexing isn’t just a waste of time. It can actually have the opposite of the desired effect and hinder database performance.

Keep in mind that every index you add takes up storage space and needs managing within the database. Plus, having too many indexes in play can slow down your insert and update performance because your database will be working overtime to update multiple indexes with every change.

To avoid this, follow data indexing best practices such as those covered in Apache Hive documentation. Aim to strike a balance between query performance and keeping the database easy to manage.

Focus on indexing columns that are frequently used in WHERE clauses, JOIN conditions, and ORDER BY clauses. Also, think about using composite indexes for queries that involve multiple columns.

Regularly monitor and tune indexes

Creating indexes isn’t one of those jobs you can do once and forget about. Because data and query patterns often evolve over time, you need to regularly check and adjust them.

It’s similar to the practices of Machine Learning Ops (MLOps), where ongoing monitoring ensures the model is still effective. In the same way, consistently reviewing and fine-tuning indexes keeps them effective.

Failure to do so can lead to technical debt, where outdated or inefficient indexes pile up over time, resulting in degraded performance and increased maintenance overhead.

Use SQL tools like MySQL’s EXPLAIN or Microsoft SQL Server’s Query Execution Plan. These will give you a solid view of how queries are being executed and which indexes are well utilized. You can then more easily see where to add missing indexes and remove ones you no longer need. It also helps you spot opportunities to update existing ones to better suit query patterns.
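To give a feel for what plan inspection looks like, here is a small sketch using SQLite’s `EXPLAIN QUERY PLAN`, which plays a similar role to the tools above. The `orders` table, the index name, and the `query_plan` helper are all hypothetical, and the wording of the plan output differs between databases and versions.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the human-readable plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 50, i * 1.5) for i in range(1000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"

# Without an index, the plan reports a scan of the whole table.
plan_before = query_plan(conn, sql, (7,))

# After adding an index, the plan should name it in a search step.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (7,))

for step in plan_before + plan_after:
    print(step)
```

Comparing the plan before and after a change like this is a quick way to confirm that an index is actually being used, rather than assuming it is.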

Let’s look at what that means in practice. Suppose you notice a particular query performing poorly despite having an index. Upon closer inspection, you discover that the indexed column’s cardinality (i.e. its number of distinct values) is low, leading to poor selectivity. In this case, modifying the index or adding columns to improve selectivity could significantly boost that query’s performance.
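One simple way to estimate selectivity is to divide the number of distinct values in a column by the total number of rows. The sketch below does this with sqlite3; the `tickets` table, its columns, and the `selectivity` helper are made up for illustration, and real systems usually expose similar statistics through their own catalog views.

```python
import sqlite3

def selectivity(conn, table, column):
    """Distinct values divided by total rows: close to 1.0 is highly selective."""
    # Identifiers are interpolated directly, so only pass trusted names.
    distinct, total = conn.execute(
        f"SELECT COUNT(DISTINCT {column}), COUNT(*) FROM {table}"
    ).fetchone()
    return distinct / total if total else 0.0

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, status TEXT, customer_id INTEGER)")
conn.executemany(
    "INSERT INTO tickets (status, customer_id) VALUES (?, ?)",
    [("open" if i % 2 else "closed", i % 250) for i in range(1000)],
)

# Two distinct statuses across 1000 rows: an index on status alone filters very little.
status_selectivity = selectivity(conn, "tickets", "status")

# 250 distinct customers across 1000 rows: a much better candidate.
customer_selectivity = selectivity(conn, "tickets", "customer_id")
```

In this made-up data, an index on `status` alone would narrow a search to about half the table, while one on `customer_id` (or on both columns together) narrows it far more.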

Consider using covering indexes

A covering index includes all the columns necessary to fulfill a query. This means that the database doesn’t need to keep accessing the underlying table.

To return to our filing cabinet analogy, you can think of it as having the right folders set out in front of you so you don’t have to search through the entire cabinet to find what you need. Using covering indexes can speed up search queries by reducing the number of overall disk I/O operations.

For example, consider a call center analytics software that logs details of each customer interaction. This might include data such as:

- Caller ID

- Call duration

- Call timestamp

- Outcome

If you’re frequently running reports on the total duration of calls, creating a covering index on the caller ID and call duration fields can optimize query performance. This allows the software to retrieve call duration information directly from the index without having to repeatedly access the main call log table.
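Here is a minimal sketch of that call log scenario, again using sqlite3 for convenience. The `call_log` schema, the sample rows, and the index name are assumptions; the point is only that the report query can be answered from the index without touching the table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE call_log ("
    "id INTEGER PRIMARY KEY, caller_id TEXT, duration_sec INTEGER, "
    "called_at TEXT, outcome TEXT)"
)
conn.executemany(
    "INSERT INTO call_log (caller_id, duration_sec, called_at, outcome) VALUES (?, ?, ?, ?)",
    [
        ("C1", 120, "2024-01-01T09:00", "resolved"),
        ("C1", 300, "2024-01-02T10:30", "escalated"),
        ("C2", 60, "2024-01-02T11:00", "resolved"),
    ],
)

# The index holds both columns the report needs: the filter and the value being summed.
conn.execute("CREATE INDEX idx_calls_caller_duration ON call_log (caller_id, duration_sec)")

report_sql = "SELECT SUM(duration_sec) FROM call_log WHERE caller_id = ?"
total_duration = conn.execute(report_sql, ("C1",)).fetchone()[0]

# SQLite labels the plan step as using a covering index when the table is not read.
plan = [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + report_sql, ("C1",))]
```

If the report later needs another column, such as the outcome, the index stops covering the query unless that column is added to it too.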

Monitor and manage index fragmentation

Index fragmentation occurs when the logical sequence of index pages is not in sync with the physical arrangement. This can make data storage less efficient and slow down search queries. It’s like a library’s card catalog not matching the actual locations of the books on the shelves.

If you don’t catch this and fix it, the problem will only get worse as more data is added or updated. It’s essential to keep a close eye on your indexes and tidy them up regularly.

Most modern systems offer tools for detecting and addressing index fragmentation, such as rebuilding or reorganizing indexes. Running your database in containers can give you a consistent environment for scheduling this kind of maintenance, but it doesn’t remove fragmentation by itself.
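The rebuild commands differ by system. SQL Server uses `ALTER INDEX ... REBUILD` or `REORGANIZE`, PostgreSQL has `REINDEX`, and MySQL offers `OPTIMIZE TABLE`. As a small runnable illustration, the sketch below rebuilds an index in SQLite after heavy churn. The `events` table and index name are hypothetical, and SQLite doesn’t report fragmentation the way larger systems do, so treat this as a shape of the maintenance step rather than a full routine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE INDEX idx_events_name ON events (name)")

# Churn the table: many inserts followed by scattered deletes.
conn.executemany(
    "INSERT INTO events (name) VALUES (?)",
    [(f"event-{i}",) for i in range(5000)],
)
conn.execute("DELETE FROM events WHERE id % 3 = 0")
conn.commit()

# Rebuild the index from scratch, then check that the database is still consistent.
conn.execute("REINDEX idx_events_name")
integrity = conn.execute("PRAGMA integrity_check").fetchone()[0]
remaining = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```

In production you would normally run this kind of job during quiet periods, since rebuilding can be expensive on large tables.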

8 database indexing strategies to try

Not all indexing strategies are created equal. When it comes to finding the best indexing strategy for your database, you need to consider a few things, including:

- What type of data you’re working with

- Which queries you run often

- What performance goals you want to achieve

With that in mind, here are a few examples of indexing strategies for different situations.

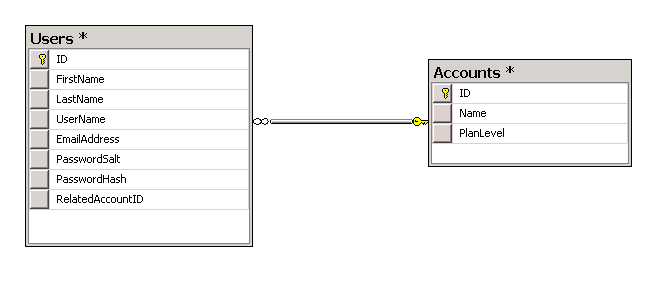

1. Single-column indexes

Single-column indexes work well for databases with tables containing a large number of rows and where queries frequently filter or sort data based on a single column. For instance, if you’re regularly looking up users by their usernames, create an index for the “username” column in the user table for faster retrieval.
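The sketch below shows both uses of a single-column index, lookup and sorting, with sqlite3. The `users` table and index name are assumptions. Before the index exists, SQLite has to sort the rows in a temporary structure; afterwards it can read them in order straight from the index.

```python
import sqlite3

def plan_steps(conn, sql):
    """Return the plan descriptions SQLite reports for a query."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users (username, email) VALUES (?, ?)",
    [(f"user{i:04d}", f"user{i}@example.com") for i in range(500)],
)

sorted_sql = "SELECT username FROM users ORDER BY username LIMIT 10"
sort_plan_before = plan_steps(conn, sorted_sql)

conn.execute("CREATE INDEX idx_users_username ON users (username)")
sort_plan_after = plan_steps(conn, sorted_sql)

# The same index also serves exact lookups by username.
found = conn.execute(
    "SELECT email FROM users WHERE username = ?", ("user0042",)
).fetchone()
```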

2. Composite indexes

If your common queries filter on multiple columns in a WHERE clause or involve ORDER BY and GROUP BY operations on multiple columns, composite indexes might be more useful. For example, if you have a sales database where you’re frequently searching for sales by date and location together, you can create a single index covering both the “date” and “location” columns.
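A minimal sketch of the sales example might look like this. The `sales` schema, the column names `sale_date` and `location`, and the index name are assumptions made for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (id INTEGER PRIMARY KEY, sale_date TEXT, location TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO sales (sale_date, location, amount) VALUES (?, ?, ?)",
    [
        ("2024-03-01", "Berlin", 40.0),
        ("2024-03-01", "Lisbon", 25.0),
        ("2024-03-02", "Berlin", 60.0),
        ("2024-03-01", "Berlin", 15.0),
    ],
)

# One index over both columns, in the order the query filters on them.
conn.execute("CREATE INDEX idx_sales_date_location ON sales (sale_date, location)")

sql = "SELECT SUM(amount) FROM sales WHERE sale_date = ? AND location = ?"
params = ("2024-03-01", "Berlin")
berlin_total = conn.execute(sql, params).fetchone()[0]
composite_plan = [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]
```

Column order matters in a composite index. An index on (date, location) generally helps queries that filter on the date, or on the date and location together, but is much less useful for queries that filter on location alone.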

3. Unique indexes

These ensure data integrity by enforcing uniqueness on one or more columns. They are beneficial for columns that should not contain duplicate values, such as primary keys or email addresses in a user table.
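As a quick sketch of the email example, the snippet below creates a unique index and shows the database rejecting a duplicate. The table and index names are hypothetical; in sqlite3 the violation surfaces as `sqlite3.IntegrityError`, and other drivers raise their own equivalent.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute("CREATE UNIQUE INDEX idx_users_email_unique ON users (email)")

conn.execute("INSERT INTO users (email) VALUES (?)", ("ada@example.com",))

# A second row with the same email violates the unique index.
try:
    conn.execute("INSERT INTO users (email) VALUES (?)", ("ada@example.com",))
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

user_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```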

4. Clustered indexes

In some databases, a clustered index determines the physical order in which rows are stored, based on the index key. This can improve the performance of range queries and sequential scans. For example, if you store time-series data, clustering on the date column keeps rows in chronological order, making date-range lookups quicker.
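How you get clustering depends on the system. SQL Server has `CREATE CLUSTERED INDEX`, and MySQL’s InnoDB clusters rows on the primary key. To keep things runnable, the sketch below uses a SQLite `WITHOUT ROWID` table, which stores rows in primary key order. The `readings` table and its columns are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# WITHOUT ROWID makes the primary key the storage order of the table itself.
conn.execute(
    "CREATE TABLE readings ("
    "recorded_on TEXT NOT NULL, sensor TEXT NOT NULL, value REAL, "
    "PRIMARY KEY (recorded_on, sensor)) WITHOUT ROWID"
)
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [
        ("2024-01-03", "s1", 20.5),
        ("2024-01-01", "s1", 19.0),
        ("2024-01-02", "s1", 19.8),
        ("2024-01-04", "s1", 21.1),
    ],
)

# A date-range query reads neighbouring rows that sit next to each other in storage.
range_rows = conn.execute(
    "SELECT recorded_on, value FROM readings "
    "WHERE recorded_on BETWEEN ? AND ? ORDER BY recorded_on",
    ("2024-01-02", "2024-01-03"),
).fetchall()
```

Because a table can only be stored in one physical order, you typically get just one clustered index per table, so it’s worth choosing the key with your most important range queries in mind.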

5. Covering indexes

These indexes contain all the information needed to answer a query, so the database doesn’t have to go back to the original data table. They’re helpful for queries with SELECT, JOIN, and WHERE clauses.

This can significantly improve query performance, especially in scenarios where you might need to generate data-driven insights from complex queries that involve multiple columns or tables. For example, if you often create reports using data from multiple columns, a covering index could include all those columns to speed up the process.

The same applies to organizations managing large-scale data processing tasks, such as those involving HPC batch jobs, where complex queries often span multiple columns or tables.

Another crucial consideration for database optimization is ensuring smooth operations during critical periods, such as website launches. Utilizing a comprehensive website launch checklist can help ensure that your database infrastructure is adequately prepared to handle increased traffic and demands on query performance during such events.

6. Partial indexes

When a subset of data is frequently queried, partial indexes can be created to cover only that subset, reducing the index size and improving query performance. An example is creating a partial index for active users in a user table where only rows with “active = true” are indexed.
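Here is a rough sketch of the active users example. SQLite and PostgreSQL both support a `WHERE` clause on `CREATE INDEX`, though not every database does. The table, the index name, and the use of 1 and 0 for true and false are assumptions for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, active INTEGER)")
conn.executemany(
    "INSERT INTO users (email, active) VALUES (?, ?)",
    [(f"user{i}@example.com", 1 if i % 10 == 0 else 0) for i in range(1000)],
)

# Only rows with active = 1 get an entry, so the index stays small.
conn.execute("CREATE INDEX idx_active_users_email ON users (email) WHERE active = 1")

# The query repeats the same condition, which lets the planner use the partial index.
sql = "SELECT id FROM users WHERE active = 1 AND email = ?"
active_match = conn.execute(sql, ("user20@example.com",)).fetchall()
inactive_match = conn.execute(sql, ("user21@example.com",)).fetchall()
partial_plan = [
    row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, ("user20@example.com",))
]
```

Queries that don’t include the `active = 1` condition can’t use this index, so partial indexes work best when the filtered subset matches how you actually query the table.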

In cloud environments dealing with massive datasets, partial indexes can help you manage resources more efficiently and maintain optimal performance. What is cloud native architecture? This refers to apps built specifically to work well in cloud environments. It involves using cloud services and concepts like microservices, containerization, and orchestration. It’s frequently used for apps that need to perform in an agile environment and be quickly scaled up or down.

7. Expression indexes

These indexes are created based on expressions or functions applied to one or more columns. They are useful for queries involving computed values or transformations. For example, you might index the result of a mathematical operation or a string concatenation performed on columns.
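A common case is case-insensitive lookup. The sketch below indexes `lower(email)` in SQLite; the table and index names are assumptions, and other systems have their own syntax for the same idea, such as functional indexes or indexed computed columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("Ada@Example.com",), ("grace@example.com",)],
)

# Index the lowercased value rather than the raw column.
conn.execute("CREATE INDEX idx_users_email_lower ON users (lower(email))")

# The query must use the same expression for the index to apply.
sql = "SELECT id FROM users WHERE lower(email) = ?"
match = conn.execute(sql, ("ada@example.com",)).fetchall()
expression_plan = [
    row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, ("ada@example.com",))
]
```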

8. Hash indexes

Hash indexes are built for equality comparisons, providing fast access when you look up rows by an exact value. They generally can’t help with range queries or sorting, and they tend to work best on columns with many distinct values, such as IDs or tokens, rather than low-cardinality columns like booleans or enumerations.
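To illustrate the idea behind equality lookups, here is a toy in-memory hash index built on a Python dictionary. This is a conceptual sketch only, not how any particular database implements hash indexes; the `build_hash_index` and `lookup` helpers and the sample rows are made up for the example.

```python
from collections import defaultdict

rows = [
    {"id": 1, "email": "ada@example.com"},
    {"id": 2, "email": "grace@example.com"},
    {"id": 3, "email": "alan@example.com"},
]

def build_hash_index(rows, column):
    """Map each value of the column to the positions of the rows that hold it."""
    index = defaultdict(list)
    for position, row in enumerate(rows):
        index[row[column]].append(position)
    return index

def lookup(rows, index, value):
    """Exact-match lookup: jump straight to matching rows without scanning."""
    return [rows[position] for position in index.get(value, [])]

email_index = build_hash_index(rows, "email")
hit = lookup(rows, email_index, "grace@example.com")
miss = lookup(rows, email_index, "nobody@example.com")
```

The dictionary finds an exact key quickly, but it keeps no ordering between keys, which is why this structure doesn’t help with “greater than” or “between” conditions.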

Database indexing – optimize database performance

In database management, optimizing queries is key to ensuring your database performs well across all platforms, from web to mobile. To do this, you need a solid indexing strategy.

Choosing the right database index can directly impact business operations. When your database is well-organized, it means employees and users can find what they need quickly, leading to tangible benefits from improved response times to streamlined operations and reduced costs.

Understanding the different approaches and best practices means you’ll be better equipped to streamline your data and manage it efficiently.

Pohan Lin is the Senior Web Marketing and Localizations Manager at Databricks, a global Data and AI provider connecting the features of data warehouses and data lakes to create lakehouse architecture. With over 18 years of experience in web marketing, online SaaS business, and ecommerce growth, Pohan is passionate about innovation and is dedicated to communicating the significant impact data has in marketing. Pohan has written for other domains such as Spiceworks and Parcel Monitor.