Businesses are increasingly looking to offshore DevOps teams to optimize their software development processes in today’s fast-paced digital market. While there are many advantages to this approach, such as lower costs and easier access to a worldwide labor pool, there are some disadvantages as well. How can these obstacles be overcome to ensure productive teamwork and successful project completion? Let’s examine some workable solutions and discuss the challenges of collaborating with offshore DevOps teams.

Understanding Offshore DevOps

Offshore DevOps is the practice of integrating development and operations across geographically dispersed teams. By drawing on international talent pools, it streamlines software development, deployment, and maintenance, frequently leading to cost savings and round-the-clock production. With suitable communication technologies and strong management protocols, organizations can address time zone and cultural differences and keep collaboration smooth. This approach helps companies improve scalability, shorten development cycles, and stay competitive in the ever-evolving IT sector.

Benefits of Offshore DevOps

Embracing offshore DevOps has many benefits that can make a big difference for a business. Cost effectiveness is one of the main justifications. Savings on salaries and operating expenses can be significant because offshore regions frequently have lower labor costs than onshore ones. Not having to maintain office buildings and equipment in expensive locations reduces overhead further.

Another strong argument is having access to a wider pool of talent. Many highly qualified and seasoned DevOps specialists with extensive knowledge of the newest tools and technologies can be found in offshore regions. In addition to giving businesses access to specialized knowledge that could be hard to come by in their native nation, this access enables them to take advantage of a variety of creative ideas and abilities.

Moreover, offshore DevOps enables 24/7 operations. Companies can maintain continuous development and operations by having teams working in multiple time zones. This results in faster turnaround times and a more prompt response to incidents, which in turn reduces downtime and improves service reliability.

Two more significant benefits are scalability and flexibility. By scaling their DevOps resources up or down according to project demands, organizations can avoid the long-term obligations associated with recruiting full-time professionals. This adaptability makes it possible to quickly adapt to modifications in the market or project needs, ensuring that resources are employed efficiently.

Offshore teams can also take over routine DevOps tasks so that internal teams can concentrate on core business processes. Freed up for strategic projects, internal teams can increase productivity and innovation. Combined with cost reductions, continuous operations, and a varied talent pool, this helps businesses shorten development cycles and launch products more quickly.

Furthermore, offshore workers foster creativity and provide a worldwide perspective. Diverse viewpoints and approaches from many fields can foster innovation and yield superior outcomes. Being exposed to worldwide best practices improves the overall quality and efficacy of DevOps processes.

Lastly, offshore DevOps helps lower risk. Geographic diversity strengthens business continuity and disaster recovery plans. By reducing its reliance on a single location or team, a business can guard against a range of threats, including natural disasters and localized disruptions.

In summary, the key benefits of offshore DevOps, which together contribute to a company’s competitive edge and overall success, include:

- Cost efficiency

- Access to a larger talent pool

- 24/7 operations

- Scalability and flexibility

- Enhanced focus on core business

- Accelerated time-to-market

- Global perspective and innovation

- Risk mitigation

Additionally, offshore DevOps is not limited to a single industry, which is one reason it is so widespread. From healthcare to finance, e-commerce to telecommunications, and manufacturing to entertainment, offshore DevOps practices are used to drive innovation, optimize processes, and maintain competitiveness in today’s digital age.

In the healthcare industry, where data security, regulatory compliance, and operational efficiency are paramount, offshore DevOps plays a crucial role. Specialized DevOps solutions tailored to the sector, such as Salesforce DevOps for healthcare, streamline operations, improve patient care delivery, and support compliance with stringent regulations like HIPAA.

In the finance sector, offshore DevOps teams are instrumental in implementing robust security measures, enhancing transaction processing speeds, and improving customer experience. Financial institutions leverage DevOps practices to accelerate software development cycles, launch new financial products, and adapt to rapidly evolving market trends.

E-commerce companies rely on offshore DevOps solutions to enhance website performance, manage high volumes of online transactions, and personalize customer experiences. DevOps practices enable e-commerce businesses to rapidly deploy updates, optimize digital marketing campaigns, and ensure seamless integration with third-party platforms.

Common Challenges in Offshore DevOps

Implementing DevOps in an offshore setting can provide significant benefits such as cost savings, access to a larger talent pool, and 24/7 productivity due to time zone differences. Even so, several challenges can impede the success of offshore DevOps collaborations.

Here are some common challenges of offshore DevOps:

Communication Barriers

Effective communication is the cornerstone of any successful project. However, working with offshore teams can often lead to misunderstandings and miscommunications. Language barriers, different communication styles, and varying levels of English proficiency can complicate interactions.

To overcome these barriers:

- Use Clear and Simple Language: Avoid jargon and technical terms that may not be universally understood.

- Regular Meetings: Schedule regular video calls to ensure face-to-face interaction and clarity.

- Documentation: Maintain detailed and accessible project documentation.

Time Zone Differences

Working across different time zones can be a double-edged sword. While it allows for continuous progress, it can also lead to delays and coordination issues.

Here are some strategies to manage time zone differences:

- Overlap Hours: Identify a few hours each day when all team members are available.

- Flexible Scheduling: Allow team members to adjust their work hours for better overlap.

- Asynchronous Communication: Use tools that support asynchronous work, allowing team members to contribute at different times.
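To make the idea of overlap hours concrete, here is a minimal Python sketch that computes the hours of the day (in UTC) when every team is at work. The team names, the whole-hour winter UTC offsets, and the 09:00-17:00 schedules are illustrative assumptions; the sketch ignores daylight saving time and half-hour offsets, so treat it as a starting point rather than a scheduling tool.

```python
def working_hours_utc(utc_offset, start_local=9, end_local=17):
    """Return the set of UTC hours (0-23) in which a team is at work.

    utc_offset is a whole number of hours (simplifying assumption:
    no daylight saving time, no half-hour offsets).
    """
    return {(hour - utc_offset) % 24 for hour in range(start_local, end_local)}


def overlap_hours(teams):
    """Return the sorted UTC hours in which every team is working.

    teams maps a team name to a (utc_offset, start_local, end_local) tuple.
    """
    hour_sets = [working_hours_utc(*schedule) for schedule in teams.values()]
    return sorted(set.intersection(*hour_sets))


# Hypothetical teams, all on a 09:00-17:00 local schedule.
teams = {
    "london": (0, 9, 17),
    "kyiv": (2, 9, 17),
    "new_york": (-5, 9, 17),
}
print(overlap_hours(teams))  # [14]

# Flexible scheduling: New York starts one hour earlier.
teams["new_york"] = (-5, 8, 16)
print(overlap_hours(teams))  # [13, 14]
```

In this example, shifting one team’s day by an hour doubles the shared window, which shows how flexible scheduling and overlap hours work together.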

Cultural Differences

Cultural differences can affect teamwork and collaboration. Different work ethics, attitudes towards hierarchy, and communication styles can lead to misunderstandings.

To bridge cultural gaps:

- Cultural Training: Provide training for team members to understand each other’s cultural backgrounds.

- Cultural Liaisons: Appoint liaisons who can help navigate cultural differences.

- Inclusive Environment: Foster an environment of inclusivity and respect for all cultures.

Managing Quality and Consistency

Maintaining consistent quality across different teams is challenging in an offshore setup. Ensuring that all teams adhere to the same standards and practices requires robust quality control mechanisms. Providing real-time feedback and conducting performance reviews also become more complex with offshore teams.

To maintain high quality:

- Standardized Processes: Implement standardized development and testing processes.

- Regular Audits: Conduct regular audits and code reviews.

- Quality Metrics: Establish clear quality metrics and KPIs.
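As one example of a quality metric that can be tracked the same way for every team, the sketch below computes a change failure rate (failed deployments divided by total deployments) per team. The `Deployment` record, the team labels, and the sample data are all made up for illustration; real figures would come from your own deployment and incident tooling.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Deployment:
    team: str     # which team shipped the change
    failed: bool  # True if the change caused an incident or rollback


def change_failure_rate(deployments):
    """Return {team: failed deployments / total deployments}."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for deployment in deployments:
        totals[deployment.team] += 1
        if deployment.failed:
            failures[deployment.team] += 1
    return {team: failures[team] / totals[team] for team in totals}


# Made-up records, for illustration only.
records = [
    Deployment("onshore", False),
    Deployment("onshore", True),
    Deployment("offshore", False),
    Deployment("offshore", False),
    Deployment("offshore", False),
    Deployment("offshore", True),
]
print(change_failure_rate(records))  # {'onshore': 0.5, 'offshore': 0.25}
```

Applying one definition of the metric to all teams keeps comparisons fair and gives audits and reviews a shared baseline.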

Ensuring Security and Compliance

Offshore DevOps teams often handle sensitive data, raising significant security and privacy concerns. Ensuring data privacy and compliance with local regulations can be challenging. Protecting intellectual property and preventing data leaks or misuse is also a major concern.

To enhance security:

- Data Protection Policies: Implement stringent data protection policies.

- Compliance Training: Provide regular training on compliance standards.

- Secure Tools: Use secure communication and collaboration tools.
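One small, concrete piece of a data protection policy is masking personal data before logs or tickets are shared across teams. The following is a minimal sketch that redacts email addresses with Python’s standard `re` module. The pattern is deliberately simple and the sample log line is invented; a real policy would cover more data types and should be reviewed against the regulations that apply to you.

```python
import re

# Simple pattern for typical email addresses (not a full RFC 5322 parser).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[REDACTED_EMAIL]"):
    """Replace every email address in text with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


# Invented log line, for illustration only.
line = "login ok user=alice@example.com ip=10.0.0.7"
print(redact_emails(line))  # login ok user=[REDACTED_EMAIL] ip=10.0.0.7
```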

Building Trust and Transparency

Trust is the foundation of any successful partnership. Building trust with offshore teams can be challenging but is essential for long-term success.

To build trust:

- Transparency: Maintain transparency in all dealings and communications.

- Regular Updates: Provide regular project updates and feedback.

- Mutual Respect: Cultivate mutual respect and understanding.

Effective Collaboration Tools

Ensuring that all teams use compatible and effective tools for integration, communication, and collaboration is essential but challenging. Providing secure and reliable access to necessary resources and tools for offshore teams can be problematic, leading to integration issues and performance bottlenecks.

Some effective collaboration tools include:

- Project Management Tools: Tools like Jira, Trello, and Asana help track progress and manage tasks.

- Communication Tools: Slack, Microsoft Teams, and Zoom facilitate communication.

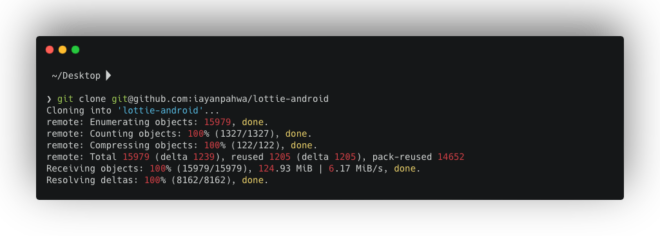

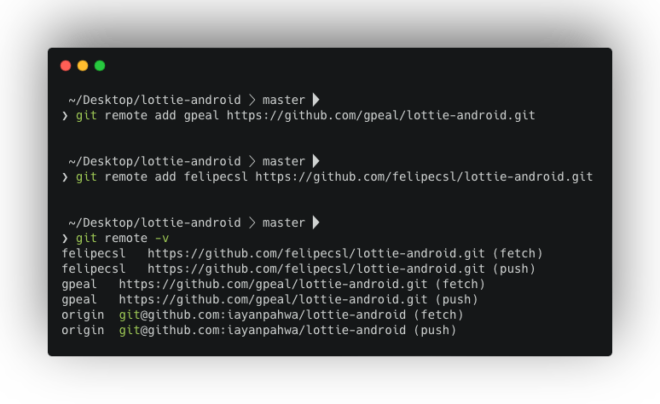

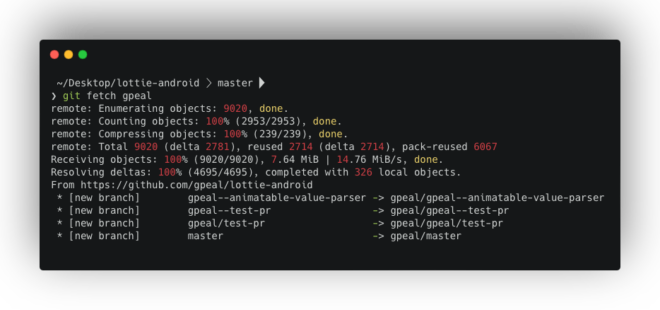

- Version Control Systems: GitHub and GitLab ensure version control and collaboration on code.

Strategies to Mitigate Challenges in Offshore DevOps

Handling the complexity of offshore DevOps requires a multifaceted approach. Efficient communication across regional boundaries is the basis for smooth collaboration. Training in cultural sensitivity promotes understanding and unity among a diverse workforce. Strong security measures protect sensitive data from constantly changing cyber threats. Consistent quality assurance procedures maintain the integrity of deliverables and build client trust. Agile project management techniques support on-time delivery by streamlining procedures. Team building exercises foster a spirit of cooperation by bringing disparate teams together. Investing in skill development and training enables team members to adjust to rapidly changing technologies. Finally, good collaboration tools promote effective coordination and information sharing, which improves output.

To address these challenges, organizations can implement various strategies:

- Enhanced Communication

- Cultural Sensitivity Training

- Robust Security Measures

- Consistent Quality Assurance

- Effective Project Management

- Team Building Activities

Other strategies include:

- Training and Skill Development:

Continuous learning and skill development are crucial for keeping up with the fast-paced tech industry. To promote skill development:

- Training Programs: Offer regular training and upskilling programs.

- Knowledge Sharing: Encourage knowledge sharing through webinars and workshops.

- Certifications: Support team members in obtaining relevant certifications.

- Effective Collaboration Tools:

Standardize on the project management, communication, and version control tools described under Effective Collaboration Tools above, and make sure offshore teams have secure and reliable access to them, so that every team works from the same toolchain.

Future Trends in Offshore DevOps

As the technology landscape continues to evolve, offshore DevOps is expected to undergo significant transformations. Several emerging trends promise to shape the future of the DevOps field.

Some emerging trends include:

- AI and Automation: The integration of AI and machine learning into DevOps will enhance predictive analytics, enabling proactive management of systems and more efficient troubleshooting.

- Remote Work: As remote work becomes more common, offshore DevOps will integrate it more fully through distributed team management techniques and virtual environments.

- Collaboration Tools and Platforms: Improved collaboration technologies will help geographically scattered teams communicate and coordinate more effectively, which will promote a more unified workflow.

- Advanced Security Measures: In response to the increase in cyberattacks, offshore DevOps teams will implement increasingly sophisticated security procedures, such as automated compliance checks and stronger encryption techniques.
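To give a sense of what an automated compliance check can look like, here is a minimal Python sketch that compares an environment’s configuration with a set of required settings and reports anything missing or wrong. The setting names and required values are hypothetical and do not correspond to any specific standard or tool.

```python
# Hypothetical baseline: setting name -> value it must have.
REQUIRED_SETTINGS = {
    "encryption_at_rest": True,
    "mfa_enabled": True,
    "public_access": False,
}


def find_violations(config, required=REQUIRED_SETTINGS):
    """Return a sorted list of settings that are missing or have the wrong value."""
    return sorted(
        name
        for name, expected in required.items()
        if config.get(name) != expected
    )


# Example configuration: MFA is off and public_access is not set at all.
config = {"encryption_at_rest": True, "mfa_enabled": False}
print(find_violations(config))  # ['mfa_enabled', 'public_access']
```

A check like this could run on every deployment so that a configuration drifting away from the baseline is flagged before it reaches production.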

Conclusion

In conclusion, offshore DevOps offers a strong option for companies looking to improve their software development workflows and gain an edge in today’s fast-paced industry. The advantages are clear: improved scalability, 24/7 operations, cost-effectiveness, and access to a larger talent pool. But managing the difficulties that come with working across distributed teams is essential to making the partnership successful.

Organizations face a variety of obstacles, including those related to creating trust, time zone differences, cultural disparities, preserving quality and consistency, and guaranteeing security and compliance. Techniques like improved communication, training for cultural sensitivity, strong security protocols, reliable quality control, efficient project administration, and team-building exercises can lessen these difficulties and promote fruitful cooperation.

To further improve operational efficiency and innovation, consider making investments in training and skill development, embracing efficient collaboration technologies, and keeping up with emerging trends in offshore DevOps. Offshore DevOps will continue to be essential to the success of companies in a variety of industries as the landscape changes with trends like artificial intelligence and automation, remote work, sophisticated communication platforms, and increased security measures.

In summary, by understanding and addressing the associated risks while making use of the advantages, companies can take full advantage of offshore DevOps to spur innovation, streamline operations, and stay competitive in the rapidly changing digital landscape.