A variety of mobile devices have flooded the Web in the past years. To no one’s surprise, Google announced that starting April 21st, they’ll expand the use of mobile-friendliness as a mobile ranking signal, in fact penalizing all sites that don’t have a mobile strategy – dubbed “Mobilegeddon” in the recent press.

Furthermore, Google just paid $25 million for exclusive rights to the “.app” top-level domain. Although the company hasn’t yet announced any specific plans for .app, this could be the signal that the Mobile Web will evolve beyond Responsive Web Design (RWD) and lean more towards rich UX/UI and mimicking of the native environment.

Presumably, [tweetable]the update to their algorithm will have a significant impact on Google’s mobile search results[/tweetable]. If you’re like me, you’d want to go all the way and find out the specifics: what is the current distribution of mobile web strategies, how many websites will be impacted by this change and to what degree? If you already have a mobile web strategy in place or just started developing one, you’d probably also want to know how to optimize it: how “lengthy” should your pages be or how “heavy”? And what Google’s PageSpeed Insights has to says about it?

We have searched the answers to these questions by analyzing the top 10,000 Alexa sites, from 5 different categories: News, E-commerce, Tech, Business and Sports. What we discovered was no less surprising and we felt like this is something worth sharing with the community. Before diving into the data let me tell you a few words on the methodology.

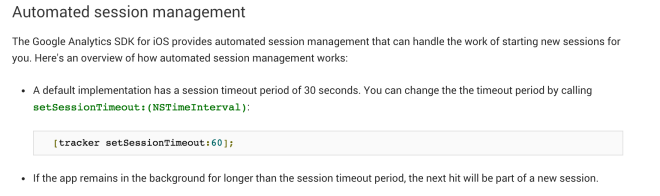

As per Google’s guidelines, we have taken into consideration three types of configurations for building mobile sites:

Google recognizes three different configurations for building mobile sites. (Google Developers)

- Responsive web design: serving the same HTML code on the same URL, regardless of the users’ device; render the display differently based on the screen size.

- Dynamic serving (also known as adaptive): using the same URL regardless of device, but generating HTML code dynamically by detecting the browser’s User Agent

- Separate URLs (also known as mobile friendly): serving different code to each device and a separate web address for the mobile version

To detect the strategy used by different websites, we have crawled them using an iPhone User Agent.

Mozilla/5.0 (iPhone; CPU iPhone OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A334 Safari/7534.48.3

On top of that, we also identified those sites that implemented a Smart App Banner meta tag in the head of their home page, to market their iOS app:

<meta name="apple-itunes-app" content="app-id=myAppStoreID">

For analyzing the performance, we have used the PageSpeed Insights API, which takes into consideration not only the size of a webpage, but also compression, server response time, browser caching and landing page redirects, among others.

We also wanted to see how long mobile pages have become. Since RWD relies heavily on scrolling, we asked ourselves if this is an effective way to display content and what can be considered an optimum page height. Throughout the rest of the article, you’ll see references to “X5” height rate, which means that a site’s main page height is 5 times the height of the viewport on iPhone 6 (375 x 667). This is an indication of how much scroll needs to be done in order to reach the last piece of content.

Overview of Mobile Strategies on the Web

It’s 2015 and if you think that RWD is winning over mobile friendly sites (separate URLs), you’re spot on. Nearly 28% of the sites we’ve researched are responsive, while 26% have opted for a separate mobile address. The gap is indeed there, but it’s not as wide as we might have assumed. The surprising fact is that 40% of the researched sites have no mobile web strategy whatsoever! To me, that’s HUGE! Since Google’s new algorithm update is just around the corner, we can easily imagine that ignoring mobile users will no longer be an option.

Deeply buried in the data, we’ve also found another interesting fact: approximately 4% considered that being adaptive (serving different content under the same web address) is the right strategy for their mobile web presence.

2% of the researched websites have chosen to target their mobile users through applications (iOS). The number may in fact be bigger, but the inconsistency in promoting their apps makes them difficult to track.

Let’s dig a little bit more and see the variations between different categories and the impact that each strategy has on the overall mobile experience.

Newspapers – resistive as always

Being “stuck in the past” seems to be rightly attributed to the publishing industry, especially to newspapers: 38% of the websites from this category are mobile-friendly (separate URLs) and only 25% responsive.

In the PageSpeed Insights (PSI) results, adaptive comes on top with 75% scoring between 40 and 60 (although only 15% are above 60). Interestingly enough, mobile-friendly sites score even better (19% with PSI > 60), while only 11% of responsive sites have a score higher than 60. In fact, most of the sites that score under 20 are responsive (10%).

Wait, what? I thought Google loves responsiveness, right? There are countless articles about how Google recommends RWD as the best way to target mobile users. Surely, something doesn’t add up!

Or maybe most of the responsive sites don’t comply with Google’s own recommendations. A plausible explanation might be that developers have mistaken Google’s love for good mobile experience with the love for responsiveness, thus taking Google’s guidelines for granted. Instead of taking advantage of RWD, they end up producing sites that score poorly on all aspects.

When looking at sites’ height distribution on mobile devices, we see that the average for mobile-friendly ones is X8, which is pretty close to the X7 average rate represented by adaptive sites. However, 54% of adaptive sites have a less than X5 height rate.

In all fairness, “old” doesn’t necessarily mean wrong for newspapers sites and this time it might be working in their favor: 47% of responsive sites have a X10 – X15 height rate and 14% even over X15, which means that mobile users have to do a lot of scrolling before reading a certain piece of content.

So why are mobile friendly pages shorter? Did they notice that not so “lengthy” pages are more suitable for reading content on mobile? Is that the reason behind choosing mobile friendly (separate URLs) over responsiveness? But if that’s the case, why not going all the way towards adaptive? They’re doing a better job in terms of producing closer to optimum mobile pages (averaged at 2.5MB for both mobile-friendly and adaptive, compared to 3.7 MB for responsive) so perhaps it’s the ease of managing a separate mobile site all together.

Looking at Alexa’s popularity ranks, we notice that as the rank decreases, the number of responsive websites grows. The majority of sites with a low popularity rank are in fact responsive (14%), while 53% of the analyzed adaptive sites place themselves among the top ranked ones.

Tech sites – leaning towards RWD

In contrast with the above findings, responsive sites predominate in the “Tech” category (33%), while only 12% are mobile friendly (separate URLs).

In addition to that, 58% have page sizes under 2MB and consequently – a higher score on PageSpeed Insights: 51% mark over 60 and only 1.5% under 20. A page size of 2MB might sound a bit too much, but in fact, caching and other factors are influencing the overall PSI score.

When comparing the results to the newspapers category, tech websites show a major improvement. Yes, responsive sites still have the biggest height ratio (X6), but the average is significantly lower than what we’ve found earlier (X8).

Unfortunately, the “Tech” category also has the biggest percentage of sites that have NO mobile strategy whatsoever: 51%. It would seem that we have either modern responsive sites or nothing at all.

Overall, adaptive sites are still the most efficient in terms of score, but responsive sites are stealing the 1st place when it comes to height ratio, with a slightly bigger percentage of websites having a height rate of X5.

E-commerce sites fare better than most

For the “Shopping” category, mobile-friendly (separate URLs) and responsive sites are at a tie: 26%. Surprisingly though, adaptive e-commerce sites scored the biggest percentage of all categories: 6%. What would cause e-commerce sites to lean towards adaptive?

One plausible reason can be their appetite for improving conversion rates, thus their attention to optimize everything related to the buying funnel, which is influencing their mobile strategy as well.

If we analyze the height distribution for all three configurations (separate mobile URLs, responsive and adaptive), we see a pattern emerging: e-commerce sites, regardless of their mobile strategy, have the biggest percentages for under an X5 height rate: 90%, 58% and respectively 81%.

One could speculate that the reason for keeping their pages shorter is related to the influence that a lower height ratio might have on conversion rates. On top of that, PageSpeed Insights offers the highest score for all three mobile strategies: ~90% of mobile friendly/adaptive sites and 75% of the responsive ones have scored at least 40 points.

[tweetable]Clearly, e-commerce sites are doing a great job at optimizing for mobile[/tweetable] and, regardless of their favorite strategy (adaptive sites seem to be leading by a low margin), they’re mastering it like no other.

Apps – still luxurious game to play

Among other categories, we’ve found that mobile applications are appealing too: close to 6% of sites from “Business” and “Sports” have created their own iOS application, even if most of these previously opted for mobile friendly sites: 9% respectively 15%.

As a general rule, regardless of the category, if a site doesn’t have a mobile web strategy, chances are it won’t go for an application either. You’d expect it to be the other way around, but if a site owner ignored all previous mobile web strategies at his disposal, would he really be open to a more laborious and expensive approach (apps)? Not really.

Optimum Height and Page Size on Mobile

Analyzing ~10,000 sites in various categories surfaced a couple of guidelines that you might want to take into consideration when implement your mobile web strategy. In essence, what’s the maximum height and page size that your mobile friendly, responsive or adaptive site should have to ensure a 50+ PageSpeed Score?

If we analyze page sizes less than 2M, we realize that mobile-friendly sites, particularly in the Sports category, come on top with little over 91%. We also notice that only 30% of responsive sites score over 50 on PageSpeed Insights. Again, just keeping the page size at this level isn’t enough to ensure a high PSI score since caching can be equally important, but it’s a good place to start.

Interestingly enough, the “News” category is the only one where the majority (52%) of the adaptive sites with scores over 50 has page sizes between 2MB – 4MB. Even if adaptive sites seem to be able to “carry” more weight, their height rate should be kept at the minimum (X5) to ensure a good score.

Applying the same logic to height rates, clearly X5 seem to be the optimum rate for scoring 50+ on PageSpeed Insights. Once more, responsive sites seem to have the least chances of scoring over 50, even if they aim for a lower height: 62%.

Is the Mobile Web Heading Towards “Appification”?

[tweetable]Today, mobile web consumption occurs mostly from in-app browsers[/tweetable]. Just look for example at the Facebook app, that used to open links by using an external browser. Now, they have embedded a browser directly in the app and it makes sense – they don’t want their users to have a broken experience.

With that in mind, shouldn’t mobile websites offer a more app-like experience? Isn’t the linear scrolling experience we see on responsive sites a bit outdated?

In all fairness, what we’ve concluded thus far doesn’t take into account various UI/UX aspects and doesn’t answer some critical questions: When scrolling becomes too much for a mobile site? What’s the impact of having a long scroll and is this the reason for poor mobile reading experience on the web? What else can we do to ensure that mobile users have a good experience on the web?

That’s why in the second phase of the study we’ll analyze how much scrolling is actually being done on a responsive page, on mobile devices. After gathering relevant information on how mobile users interact with responsive sites we’ll be able to complete the next phase of the study by answering some key questions:

- What’s the mobile device and browser they’re using for accessing a site?

- How much time they spend on a particular page?

- What’s the maximum scroll height they reached on a site?

From “Nice To Have” To Mandatory

We’ve seen that 40% of the top 10,000 Alexa sites from 5 categories (News, Tech, E-commerce, Business, Sports) don’t do anything when it comes to their mobile users. However, we’re in the middle of a big shift: [tweetable]having a mobile strategy on the web is no longer just “nice to have”[/tweetable]. If we take into consideration the impending change of Google’s mobile ranking algorithm, we can conclude that at this point any mobile web strategy is a good strategy.

As far as this first part of our study is concerned, from a technical point of view, a page that fits within 2MB and has a height rate of X5 has a good chance to score 50+ on Google’s PageSpeed Insights. Although adaptive websites are overall the most efficient ones regarding these aspects, they are not very popular either. Even though the study clearly shows that RWD is far from being optimum, responsiveness is the leading strategy adopted when targeting mobile users.

If we add to the mix the “.app” top-level web domain bought by Google, the line between native apps and web apps is getting thinner and thinner. The Mobile Web is already evolving beyond responsiveness into something new and exciting where everything is an app instead of a site, where user interactions are more important than just page views and ultimately where all apps are interlinked into a Web of apps.

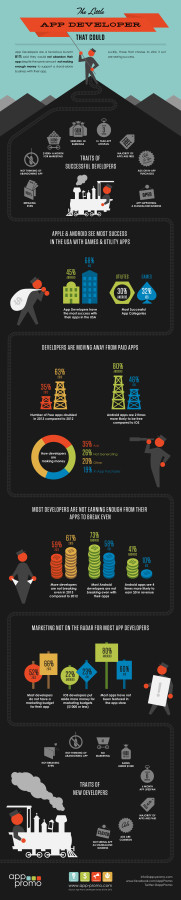

If you are interested in learning more about mobile app development read the third post of our series on Mobile App Marketing, on Business Models.